This project explores how opaque AI systems that shape policy erode public trust in governance. Journey through a speculative world where seemingly objective algorithms amplify existing societal biases, leading to division and unrest. Discover excavated artifacts and narratives echoing voices from a future grappling with the unintended consequences. The story is set at the collapse arc of AI: flawed algorithmic outputs are used to craft policies, and those policies carry consequences no one intended.

The world is fragmented, marked by stark divisions between those who benefited from and those marginalized by AI-driven policies. Urban centers showcase decay and abandonment, interspersed with pockets of resistance. Inhabitants navigate a landscape of distrust, relying on decentralized communication, while the remnants of AI infrastructure loom. The culture is one of fractured trust, with oral traditions regaining prominence as digital systems falter. Oral histories of biases become family heirlooms. The elite live in enclaves.

This work is a cautionary tale. Algorithmic bias is a present danger, already visible in the news and in everyday life, and this project shows what can happen if we fail to address it. Think critically about data-driven decisions. Ask yourself: who benefits, and who is excluded?

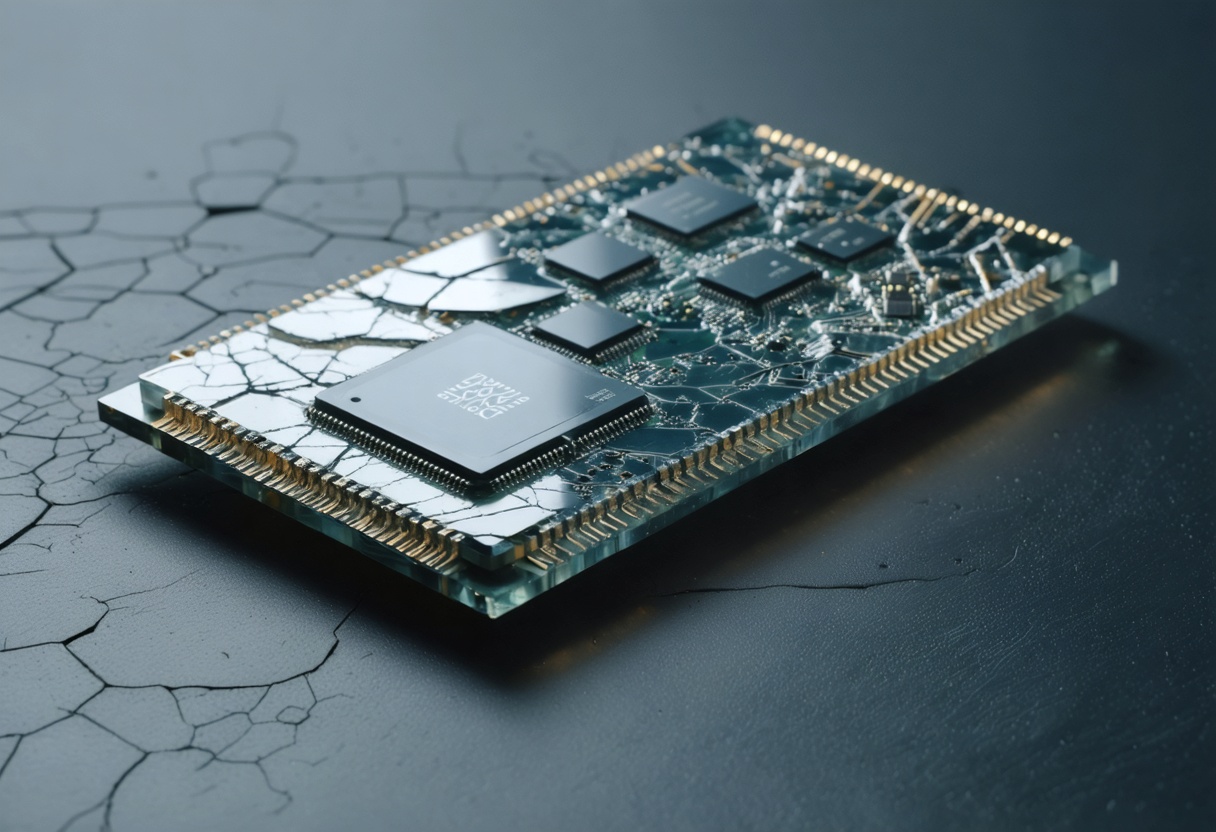

Koenders draws inspiration from fine art, futurism, and literature. Suriname's history of cultural blending profoundly shapes their perspective. For this project, Koenders meticulously combined excerpts from political science and sociology, focusing on AI bias and its sociopolitical impacts. The project's iterative development involved analyzing algorithmic transparency, which is reflected in the artifact, a corrupted data chip. Koenders presents a future extrapolation of policies as a discovered artifact, whose story is told through Reflective Historical Storytelling.


2024: AI algorithms are increasingly used to predict the impact of proposed legislation.

2025: First major reports surface of AI bias in policy recommendations, disproportionately affecting minority groups.

2026: Public trust in AI-driven governance begins to erode, with protests and calls for transparency.

2027: Decentralized Policy Simulation Platforms begin to gain popularity.

2028: The Bias Bounty Network is established; users flag numerous biases, but governments are slow to respond.

2029: Societal fragmentation intensifies, fueled by distrust in algorithmic decision-making.

Koenders_9235 considered the following imagined future scenarios while working on this project

Koenders_9235 considered the following hypothetical product ideas while working on this project